Docker cheatsheet

If you came here because you liked the Moby Dick inspired cover image, spoiler alert: this post is not really about whales. It is about containerization software called Docker, which uses a whale as its mascot.

Docker has become a de facto standard in the IT industry over the last decade. It packages code into units called containers and lets you run them in any environment where Docker is available. Docker makes development much easier: setting up a dev environment takes just a few commands instead of installing several apps locally that might differ across platforms. The same goes for deployment. Scaling your app up (adding more instances) and creating CI/CD pipelines become much simpler.

In the teams I have worked in so far, Docker was already set up, so I never actually needed to figure it out. When CI pipeline errors emerged, I had to rely on the DevOps engineers' help to navigate the project's Docker files. It's good for both frontend and backend devs to know at least the fundamentals. This post aims to introduce Docker through a practical example.

There are three fundamental concepts we need to know up front 💡

- Dockerfile - an instruction file that describes how your image should be created. The image can be based on one pulled from a cloud registry like Docker Hub, or you can create it from scratch. Check out the Dockerfile docs

- Image - built from a Dockerfile; it's a snapshot of your software with all of its dependencies

- Container - a running instance of an image; one image can be used to spin up multiple instances of your app

ABC of a Dockerfile

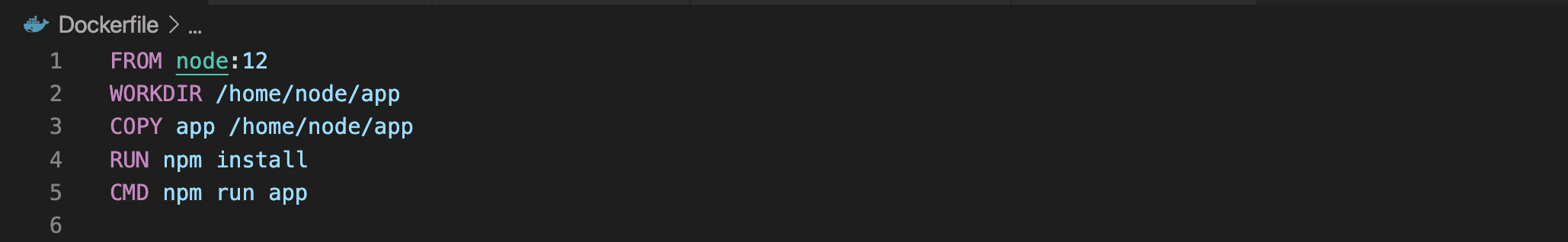

A Dockerfile looks like this:

It uses a set of instructions (keywords listed in Docker's docs) to build an image that will then be used to run our container 📋

- FROM - sets the base Docker image; here we are using node v12, available on Docker Hub

- WORKDIR - sets the working directory for the instructions that follow

- COPY - copies files and directories into the image's filesystem at the destination path. It skips files listed in the .dockerignore file, similar to how .gitignore works

- RUN - executes the given commands at build time, and the resulting image is used in the next step. Here we are installing the packages listed in package.json using npm

- CMD - sets the command that starts our app when the container runs; in our case a script from package.json that runs “node index.js”

- EXPOSE - informs Docker that the container listens on the specified network port; you can also change the protocol from the default TCP to UDP. It does not publish the port by itself; that happens at run time with the -p argument
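The instructions above can be put together roughly as follows. This is only a sketch written as a shell snippet that generates the files in a scratch directory: the /app working directory, port 9999, the "npm start" script and the .dockerignore entries are assumptions for illustration, not necessarily what the original project uses.

```shell
# Hypothetical scratch directory so the sketch does not touch a real project.
project_dir="$(mktemp -d)"

# Write a Dockerfile that follows the instruction list above.
# Assumed values: node:12 tag, /app workdir, port 9999, "npm start" script.
cat > "$project_dir/Dockerfile" <<'EOF'
# Base image: Node.js 12 from Docker Hub
FROM node:12
# All following instructions run relative to this directory
WORKDIR /app
# Copy the project (minus .dockerignore entries) into the image
COPY . .
# Install dependencies listed in package.json at build time
RUN npm install
# Command executed when a container starts
CMD ["npm", "start"]
# Document the port the app listens on (use 9999/udp for UDP)
EXPOSE 9999
EOF

# Keep local dependencies and VCS metadata out of the build context.
cat > "$project_dir/.dockerignore" <<'EOF'
node_modules
.git
EOF

# Show the generated file.
cat "$project_dir/Dockerfile"
```

Excluding node_modules is a common choice because RUN npm install recreates it inside the image anyway.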

Creating an image 🐳

Once our Dockerfile is ready, we need to build an image. To do that, we run this command in the directory that contains the Dockerfile:

docker build -t simple_node .

To list all existing images, we can run:

docker images

⚠️ An important thing to keep in mind is that every build can leave a new image behind, and as you can see they are pretty heavy on disk space! Images can easily go over 1GB, so make sure you get rid of unused ones.
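One possible way to do that cleanup is sketched below. The tag simple_node:old is a hypothetical name for an outdated image, used here only for illustration; swap in whatever docker images shows on your machine.

```shell
old_image="simple_node:old"   # hypothetical tag of an image we no longer need

cleanup_images() {
  # Remove dangling (untagged) images left behind by repeated builds.
  docker image prune -f
  # Remove one specific image; this fails while a container still references it.
  docker rmi "$old_image"
}

# Only attempt the cleanup when the docker CLI is installed.
if command -v docker >/dev/null 2>&1; then
  cleanup_images || echo "cleanup incomplete (image missing, in use, or daemon not running?)" >&2
fi
```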

🟢 If the image was built successfully, we can run it with this command:

docker run --name nodeapp -p 9999:9999 simple_node

The -p argument maps a port on the host machine to a port in the container (in HOST:CONTAINER order), so the app becomes reachable from outside the container. You can also run the container in detached mode (so it doesn't block your terminal) by adding the -d flag.
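As a sketch of both options combined, the snippet below starts a second container in detached mode and maps a different host port to it. The container name nodeapp_bg and host port 8080 are made up for the example; 9999 is assumed to be the port the app listens on inside the container.

```shell
image="simple_node"       # image built earlier with docker build -t simple_node .
container="nodeapp_bg"    # hypothetical container name
host_port=8080            # hypothetical port on the host machine
container_port=9999       # port the app is assumed to listen on in the container

start_detached() {
  # -d hands the terminal back right away; -p takes HOST:CONTAINER.
  docker run -d --name "$container" -p "$host_port:$container_port" "$image"
  # Output no longer reaches the terminal, so read it from the logs.
  docker logs "$container"
}

# Only attempt the run when the docker CLI is installed.
if command -v docker >/dev/null 2>&1; then
  start_detached || echo "run failed (is the image built and the name free?)" >&2
fi
```

With this mapping the app would be reached on port 8080 of the host, while it keeps listening on 9999 inside the container.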

To see the list of containers and their statuses, run:

docker ps -a

The -a flag shows all containers instead of only the running ones.
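When the list gets long, the output can be narrowed down. The sketch below assumes the container is named nodeapp, as in this post, and uses the --filter and --format options of docker ps.

```shell
name="nodeapp"   # container name used in this post

list_by_name() {
  # -a includes stopped containers; --filter keeps names matching $name;
  # --format prints only the chosen columns as a table.
  docker ps -a --filter "name=$name" --format "table {{.Names}}\t{{.Status}}\t{{.Ports}}"
}

list_exited() {
  # Show only containers that have already exited.
  docker ps -a --filter "status=exited" --format "table {{.Names}}\t{{.Status}}"
}

# Only query Docker when the docker CLI is installed.
if command -v docker >/dev/null 2>&1; then
  list_by_name || echo "docker ps failed (is the daemon running?)" >&2
  list_exited || true
fi
```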

🛑 You can stop or kill containers by the name or ID you will find in the output of docker ps. In this case I am using the container named nodeapp:

docker stop nodeapp

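The difference is in the signal Docker sends under the hood to terminate the process: stop sends SIGTERM (followed by SIGKILL if the process has not exited after a grace period), while kill sends SIGKILL right away. Stop lets the process finish gracefully, whereas kill causes an immediate exit that might sometimes cause trouble. Once the container is stopped, you can remove it from local storage (adding the -f flag also removes a container that is still running):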

docker rm nodeapp
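The three ways of getting rid of a container can be compared side by side. This is a minimal sketch assuming the container is named nodeapp; the 5 second grace period is an arbitrary value picked for the example.

```shell
container="nodeapp"   # container name used in this post

# Graceful: SIGTERM first, then SIGKILL if still running after 5 seconds.
stop_gracefully() { docker stop -t 5 "$container"; }

# Immediate: SIGKILL straight away, no chance to clean up.
stop_now() { docker kill "$container"; }

# Force removal: kills the container if it is running, then deletes it.
remove_forced() { docker rm -f "$container"; }

# Only touch containers when the docker CLI is installed.
if command -v docker >/dev/null 2>&1; then
  # Try the graceful path first and fall back to kill.
  stop_gracefully || stop_now
  remove_forced || echo "nothing to remove (container missing or daemon not running?)" >&2
fi
```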

You can run multiple instances of your app based on your image on multiple machines, on a VPS or in the cloud, literally anywhere you want. In the next post I will try to containerize a rudimentary Node.js app and put it behind a load balancer based on the haproxy image, with a little help from docker-compose, a tool that simplifies spinning up multiple containers with one command.