How to scale a web socket app?

WebSocket is a communication protocol that runs over TCP and starts its life as an HTTP request. It was first proposed around 2008, was standardized as RFC 6455 in 2011, and all major browsers have supported it for about a decade. What makes it special is that, unlike request/response communication patterns, it keeps a single TCP connection (usually on port 443 or 80) alive. It opens a channel, so to speak, between a client and a server, and either side can stream data to the other at any time. Most popular use case? Chat.

WebSocket connection 🤝

You have probably heard at some point about a handshake in computing. HTTP runs on top of TCP, and a TCP connection starts with a “three-way handshake”. The client sends a SYN segment to the server, the server acknowledges it with a SYN-ACK, and then the client replies with an acknowledgment (ACK). It’s like meeting a friend: you would say “Hi Stas, can I talk to you?”, Stas would reply “Yes, we can talk”, and then you would say something like “It’s very nice that you allow me to talk to you”. Only then would the conversation start.

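The WebSocket handshake is a little bit different. Once the TCP connection is open, the client sends a regular HTTP GET request with an Upgrade: websocket header, which signals that it wants to switch to a WebSocket connection, and the server agrees by answering with status 101. Later on you will see it in dev tools!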
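To make the handshake a bit more concrete, here is a minimal sketch of what a server does with the client's Sec-WebSocket-Key header, as described in RFC 6455. The function names are my own and are not part of any library; in practice the WebSocket library does this for you.

```javascript
const crypto = require("node:crypto");

// Fixed GUID defined by RFC 6455; it is the same for every WebSocket server.
const WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11";

// Compute the Sec-WebSocket-Accept value for a given Sec-WebSocket-Key.
function computeAcceptKey(secWebSocketKey) {
  return crypto
    .createHash("sha1")
    .update(secWebSocketKey + WEBSOCKET_GUID)
    .digest("base64");
}

// Build the raw 101 response a server sends to complete the handshake.
function buildHandshakeResponse(secWebSocketKey) {
  return [
    "HTTP/1.1 101 Switching Protocols",
    "Upgrade: websocket",
    "Connection: Upgrade",
    `Sec-WebSocket-Accept: ${computeAcceptKey(secWebSocketKey)}`,
    "",
    "",
  ].join("\r\n");
}

// Sample key taken from the example in RFC 6455.
console.log(buildHandshakeResponse("dGhlIHNhbXBsZSBub25jZQ=="));
```

The client checks the Sec-WebSocket-Accept value before it treats the connection as a WebSocket, which proves the server really understood the upgrade request.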

The problem ❓

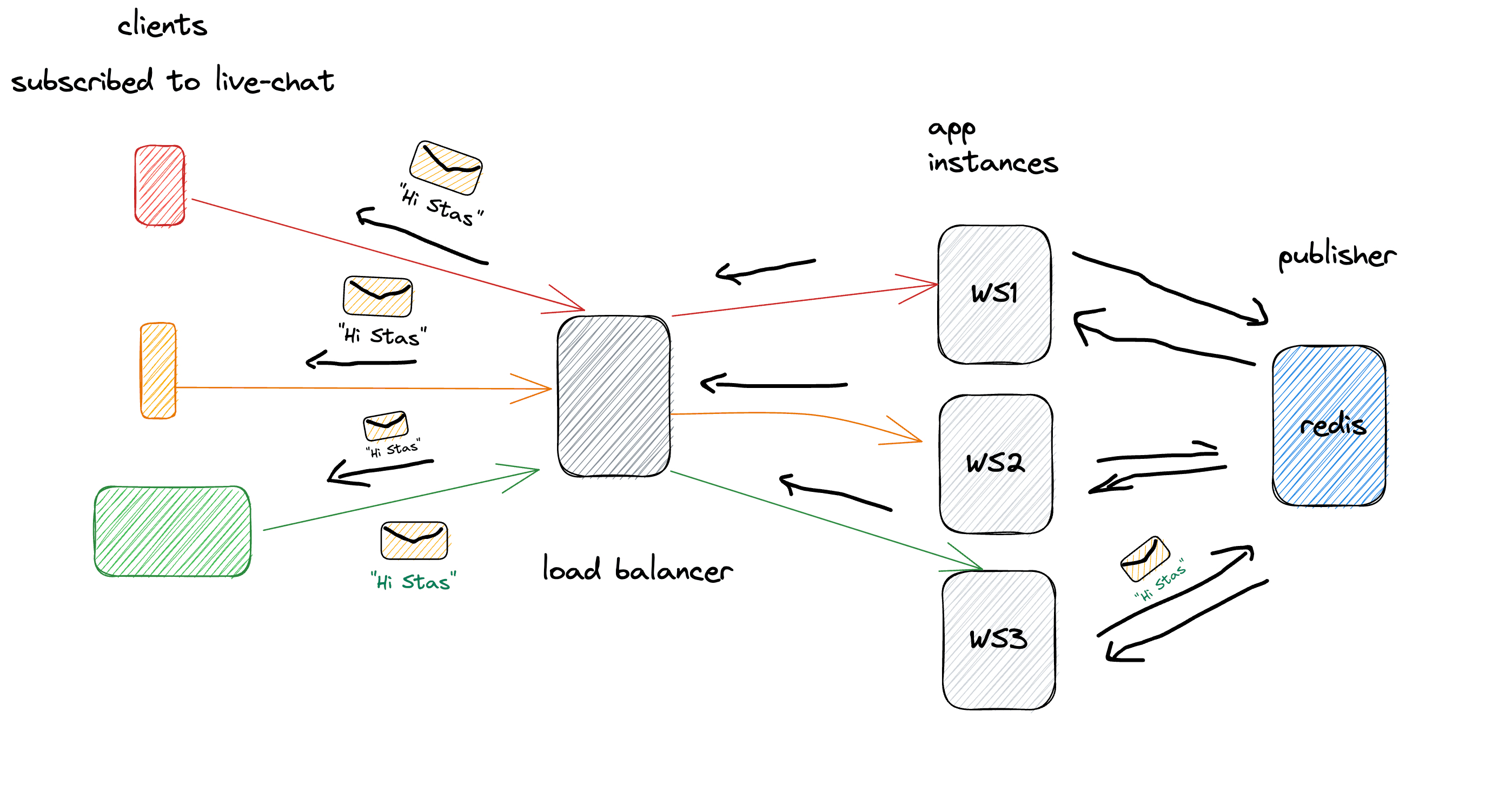

Since I’ve also been playing around with Docker recently, I wondered how to scale this kind of simple chat application when the connection is stateful. How can we keep each client connected to a specific server instance and still have all chat users receive messages, even though they are connected to separate instances that don’t know about each other?

First of all, I will use dev tools as the client to start a connection and send messages. I will use docker-compose to spin up a load balancer using the haproxy image, several instances of the Node chat app and finally Redis as a pub/sub broker.

This should work like this:

💡: Redis is a very popular in-memory data store, often used for caching costly/slow database queries. A load balancer is a sort of gate that distributes incoming connections across different server instances. I highly recommend reading this explainer that uses animations to illustrate different balancing strategies.
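As a rough illustration of one balancing strategy, here is a small round-robin sketch. This is not how HAProxy is implemented, only the idea behind the strategy, and the server names are hypothetical placeholders for the chat app containers.

```javascript
// Return a function that hands out servers one after another, in a loop.
function createRoundRobin(servers) {
  let next = 0;
  return function pick() {
    const server = servers[next];
    next = (next + 1) % servers.length;
    return server;
  };
}

// Hypothetical backend addresses, used only for this example.
const pickServer = createRoundRobin(["ws1:8080", "ws2:8080", "ws3:8080"]);

// Each new client connection is handed to the next server in the list.
for (const client of ["tab-1", "tab-2", "tab-3", "tab-4"]) {
  console.log(`${client} -> ${pickServer()}`);
}
```

Because a WebSocket connection stays open, a client remains on whichever instance it was given during the handshake. That is exactly why the instances need a broker to share messages.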

Docker Compose 🐋

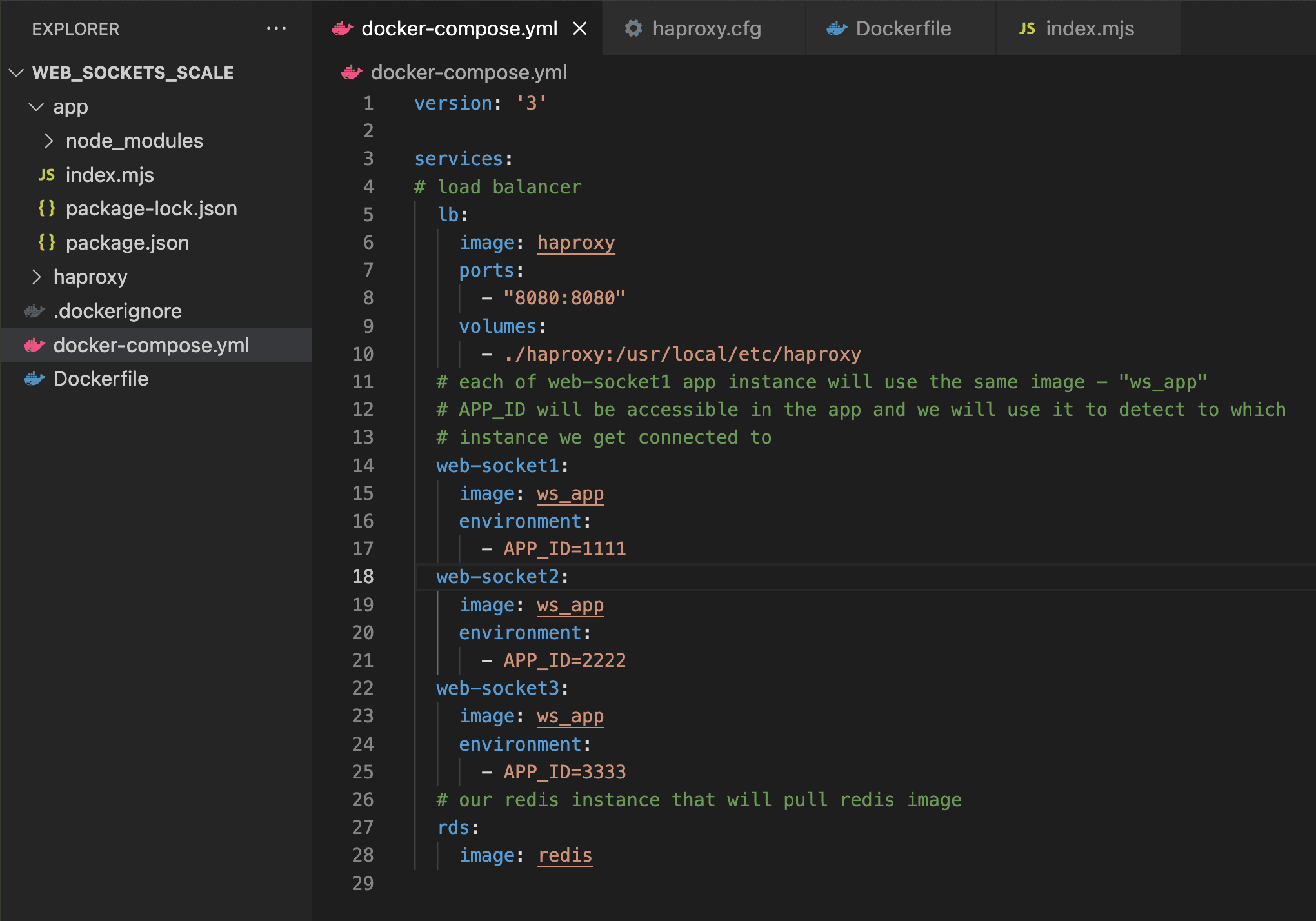

Now I need a way to spin up several instances of my app and other elements of the infrastructure. We will get to the code part later on.

First we need to create a docker-compose.yaml file. You give it to Docker, and it sets up everything from these instructions, including the list of Docker images to run.

⚠️ I ran into problems with the haproxy.cfg file. It would cause the load balancer container to exit on docker compose execution. After a short investigation the error turned out to be a missing newline at the end of the config.

This is the command I used for testing the config syntax:

haproxy -c -f haproxy.cfg

I added a missing line with:

echo "" >> haproxy.cfg

Another thing to remember is that you need all your images ready. So if you created your own Dockerfile for websocket-app, you need to build it in the directory where the Dockerfile resides, like this:

docker build -t [your_image_name] .

Now, to use the instructions from your yaml file and start your containers, run:

docker-compose up

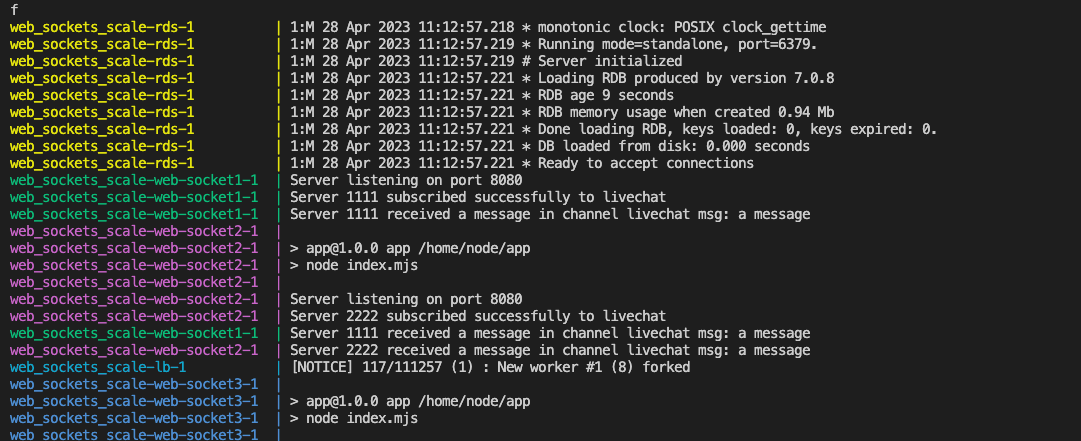

It should look something like this in your terminal if everything went fine 🥳

Implementing webSocket server in Node.js 🚧

In this project we are using the 'websocket' library to create a ws server in Node.js. To connect to Redis on port 6379 we will use the node redis library. We also need APP_ID from process.env, remember? It is passed in docker-compose.yaml and will be different for each node app instance! We will also keep a list of web socket connections for sending messages.

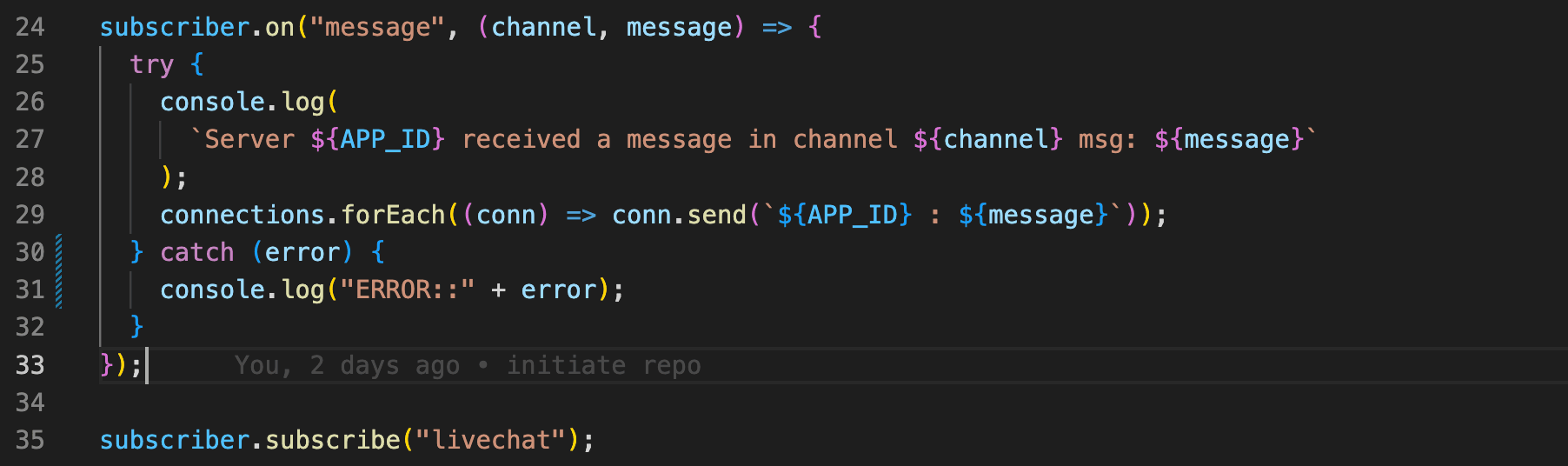

We will use the publish/subscribe model in Redis. In short, a publisher sends a message to a channel and all of the channel’s subscribers receive it. One important thing: we will need 2 instances of the Redis client, one for the subscriber and one for the publisher, because a connection that is in subscriber mode is generally limited to subscribe/unsubscribe commands and cannot publish.

There are many alternatives to Redis for that purpose. RabbitMQ and Kafka are dedicated message brokers that also support the pub/sub pattern.

First let’s make the subscriber listen to the “subscribe” event. Once the subscription is confirmed, the publisher publishes a message to a channel we called “livechat”. Then the subscriber subscribes to the livechat channel, so as soon as an app instance starts, it already sends a test message and that message gets published.
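Here is a minimal sketch of that wiring. To keep it runnable without a Redis server, it uses a tiny in-memory fake broker that I made up for this example. The "subscribe" and "message" events and the subscribe()/publish() calls mirror the older, callback-style node redis API (an assumption; newer versions of the library use a different API), and announceOnSubscribe is a hypothetical helper name.

```javascript
const { EventEmitter } = require("node:events");

// Minimal in-memory stand-in for Redis pub/sub, used only so this sketch
// runs on its own. It is NOT the redis library.
function createFakeBroker() {
  const bus = new EventEmitter();
  const createSubscriber = () => {
    const subscriber = new EventEmitter();
    subscriber.subscribe = (channel) => {
      bus.on(channel, (message) => subscriber.emit("message", channel, message));
      subscriber.emit("subscribe", channel, 1); // confirmation, like Redis sends
    };
    return subscriber;
  };
  const createPublisher = () => ({
    publish: (channel, message) => bus.emit(channel, message),
  });
  return { createSubscriber, createPublisher };
}

// The wiring described above: publish a test message once subscribed.
function announceOnSubscribe({ subscriber, publisher, appId, channel = "livechat" }) {
  subscriber.on("subscribe", (subscribedChannel) => {
    publisher.publish(subscribedChannel, `Server ${appId} subscribed to ${subscribedChannel}`);
  });
  subscriber.subscribe(channel);
}

const broker = createFakeBroker();
const subscriber = broker.createSubscriber();
const publisher = broker.createPublisher();
const received = [];
subscriber.on("message", (channel, message) => {
  received.push(message);
  console.log(`[${channel}] ${message}`);
});
announceOnSubscribe({ subscriber, publisher, appId: "1111" });
```

In the real app the two clients would come from the redis library, each with its own connection to port 6379, and appId would be read from process.env.APP_ID.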

Now, whenever a message is sent to the channel, the subscriber receives it and we send it to each connection in the connections array. This is how all chat clients get a message, no matter which server instance it was sent through.
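The fan-out step can be sketched like this. The connection objects are fakes with a send() method so the example runs on its own, and prefixing each message with the instance id is my assumption, added to make it visible which instance delivered the message.

```javascript
// Forward one channel message to every WebSocket connection held by this instance.
function broadcast(connections, appId, message) {
  for (const connection of connections) {
    connection.send(`${appId}: ${message}`);
  }
}

// Fake connection that only records what was sent to it (illustration only).
function createFakeConnection(name) {
  return { name, sent: [], send(data) { this.sent.push(data); } };
}

const connectionsOnServerA = [createFakeConnection("alice"), createFakeConnection("bob")];
const connectionsOnServerB = [createFakeConnection("carol")];

// Both instances are subscribed to the same channel, so each one runs its
// own handler when a message is published.
function onChannelMessage(message) {
  broadcast(connectionsOnServerA, "A", message);
  broadcast(connectionsOnServerB, "B", message);
}

onChannelMessage("hello everyone");
console.log(connectionsOnServerA.map((c) => c.sent));
console.log(connectionsOnServerB.map((c) => c.sent));
```

In the real app each instance would call something like broadcast from its own subscriber's "message" handler, with its own connections array.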

We need to create a raw http server that we will then pass to the WebSocketServer instance. We also need to listen for incoming messages.

On each new web socket connection request we create a con (connection) that will publish every message it receives to the livechat channel. Then we push this connection to the array of connections, which is later used to propagate the messages.
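A minimal sketch of that request handler is below. The request and publisher are passed in as parameters and replaced with fakes here, so the example runs without external packages. handleRequest is a hypothetical name, and removing the connection on "close" is my addition rather than something described above.

```javascript
const { EventEmitter } = require("node:events");

// Handle one incoming WebSocket request: accept it, publish every text
// message to the channel, and remember the connection for broadcasting.
function handleRequest(request, { publisher, connections, appId, channel = "livechat" }) {
  const con = request.accept(null, request.origin);
  con.on("message", (message) => {
    // The 'websocket' package delivers text frames as { type: "utf8", utf8Data }.
    if (message.type === "utf8") {
      publisher.publish(channel, message.utf8Data);
    }
  });
  con.on("close", () => {
    const index = connections.indexOf(con);
    if (index !== -1) connections.splice(index, 1);
  });
  connections.push(con);
  console.log(`Server ${appId} accepted a connection`);
  return con;
}

// Fakes standing in for the library objects (illustration only).
const fakeRequest = { origin: "http://localhost", accept: () => new EventEmitter() };
const published = [];
const fakePublisher = { publish: (channel, message) => published.push(`${channel}: ${message}`) };
const connections = [];

const con = handleRequest(fakeRequest, { publisher: fakePublisher, connections, appId: "1111" });
con.emit("message", { type: "utf8", utf8Data: "hi" });
console.log(published, connections.length);

// With the real packages the wiring would look roughly like this:
//   const http = require("http");
//   const WebSocketServer = require("websocket").server;
//   const httpServer = http.createServer();
//   const wsServer = new WebSocketServer({ httpServer });
//   httpServer.listen(8080);
//   wsServer.on("request", (request) => handleRequest(request, deps));
```

Keeping the handler as a plain function with its dependencies passed in also makes it easy to try out without a running Redis or a browser.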

Testing our app

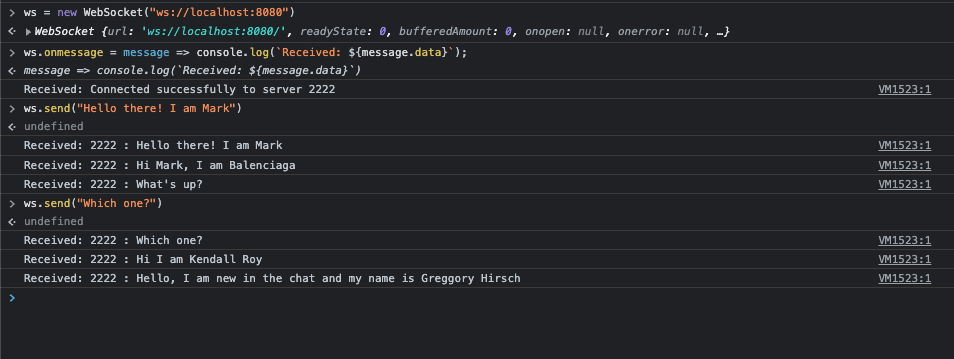

Now we can start testing how our web socket app scales. To do that we can open dev tools in several browser tabs. So clear the console and let's begin.

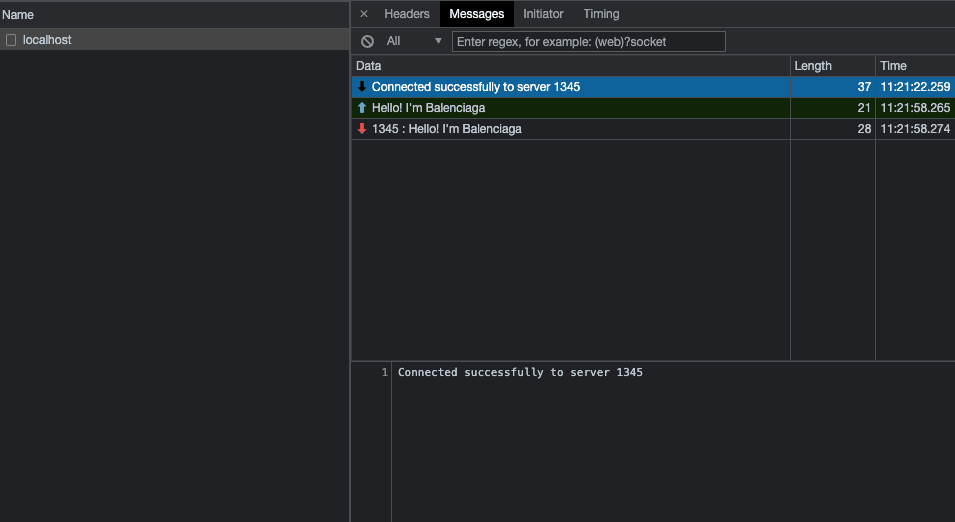

First we initiate the ws connection. Notice how we are using ws:// instead of http://. For an encrypted connection we would use wss://. If we go to the network tab in dev tools, we can track the websocket connection and its specific Upgrade headers. Look at the GET request with status code 101 Switching Protocols, which switches from http to websocket.
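For reference, the client side can be sketched as below. connectToChat is a hypothetical helper. In the browser it uses the built-in WebSocket, and here a fake class is passed in so the sketch runs without a server.

```javascript
// Open a chat connection and log everything that arrives.
function connectToChat(url, WebSocketCtor = globalThis.WebSocket) {
  const ws = new WebSocketCtor(url);
  const received = [];
  ws.onopen = () => console.log(`connected to ${url}`);
  ws.onmessage = (event) => {
    received.push(event.data);
    console.log(`received: ${event.data}`);
  };
  ws.onclose = () => console.log("connection closed");
  return { ws, received };
}

// Fake WebSocket used only to exercise the helper outside a browser.
class FakeWebSocket {
  constructor(url) {
    this.url = url;
    this.sent = [];
  }
  send(data) {
    this.sent.push(data);
  }
}

const chat = connectToChat("ws://localhost:8080", FakeWebSocket);
chat.ws.onopen();
chat.ws.send("Hello from tab 1");
// Simulate the server pushing the message back to this client.
chat.ws.onmessage({ data: "1111: Hello from tab 1" });
```

In the dev tools console you would call connectToChat("ws://localhost:8080") without the second argument, wait for the "connected" log, and then call chat.ws.send(...) from each tab.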

Now localhost:8080 is proxied to the app instances by the HAProxy load balancer. Take a look at the haproxy config file:

Notice how it proxies traffic from port 8080 to port 8080 of the app instances. Every time we send a message we will see it in the Messages tab in dev tools:

Remember how we put the APP_ID variable into docker-compose? We did that to identify each instance. So when a new ws connection is established, we get this APP_ID printed, and we can tell which client is connected to which server. Even if the server is not the same, meaning that a different ws connection is used, each subscriber/conversation user gets the messages sent by every other user! It works as we expected!

Code for this post is available on my github repo here ♻️

Read more 🧽

- More about pub/sub and brokers pattern in "Node.js design patterns" book

- By the way, web sockets also work over HTTP/2, where the connection is bootstrapped with the CONNECT method (RFC 8441). HTTP/2 is a multiplexing protocol, so it can carry multiple requests over one connection, which can make it considerably more efficient. Read more in this spec

- Remember to check out this amazing explainer on load balancers